I'm Jin, a Ph.D. candidate in Physics and Biology in Medicine at UCLA, specializing in vision-language foundation models and medical image analysis. My research focuses on building AI that can understand and reason about visual information, with applications in computer vision, spatial reasoning, and medical imaging.

My experience ranges from leading automation projects at ABB Korea to conducting research at UCLA, so I combine technical expertise with practical problem-solving skills. My recent work at UCLA's Computer Vision and Imaging Biomarkers Lab focuses on self-supervised learning, foundation model adaptation, and vision-language models for medical applications.

Research Interests

Vision-Language Models

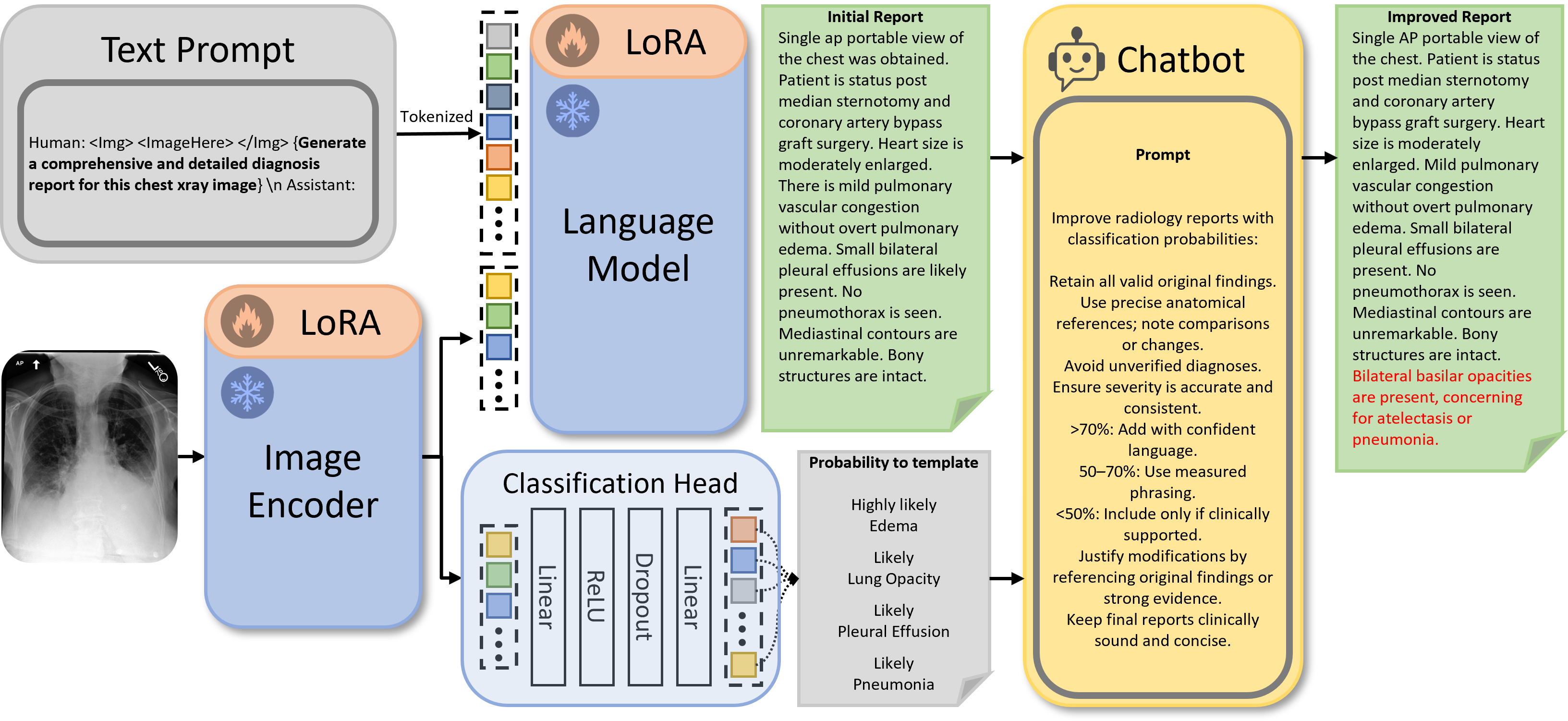

Vision-language foundation models combining visual and textual understanding for medical applications. Working on parameter-efficient fine-tuning strategies using knowledge distillation and pseudo-labeling, validated on challenging segmentation tasks. Recent work includes dual-path radiology report generation, accepted at a MICCAI 2025 Workshop, and textual reasoning for affordance coordinate extraction in multimodal environments. Research direction outlined in my Ph.D. dissertation proposal.

Medical Image Analysis

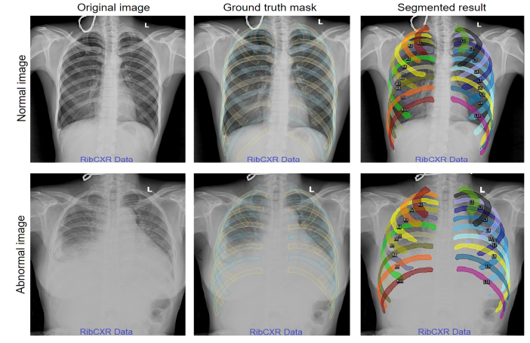

Self-supervised learning and foundation models addressing data scarcity challenges in computer vision. Developing pretraining and domain adaptation methods to handle limited annotated data. Core expertise demonstrated in my Ph.D. qualifying examination on foundation models and knowledge distillation. Recent presentations include foundation models with deep layer adapters at RSNA 2024 and self-supervised learning for chest X-ray segmentation at SPIE 2024.

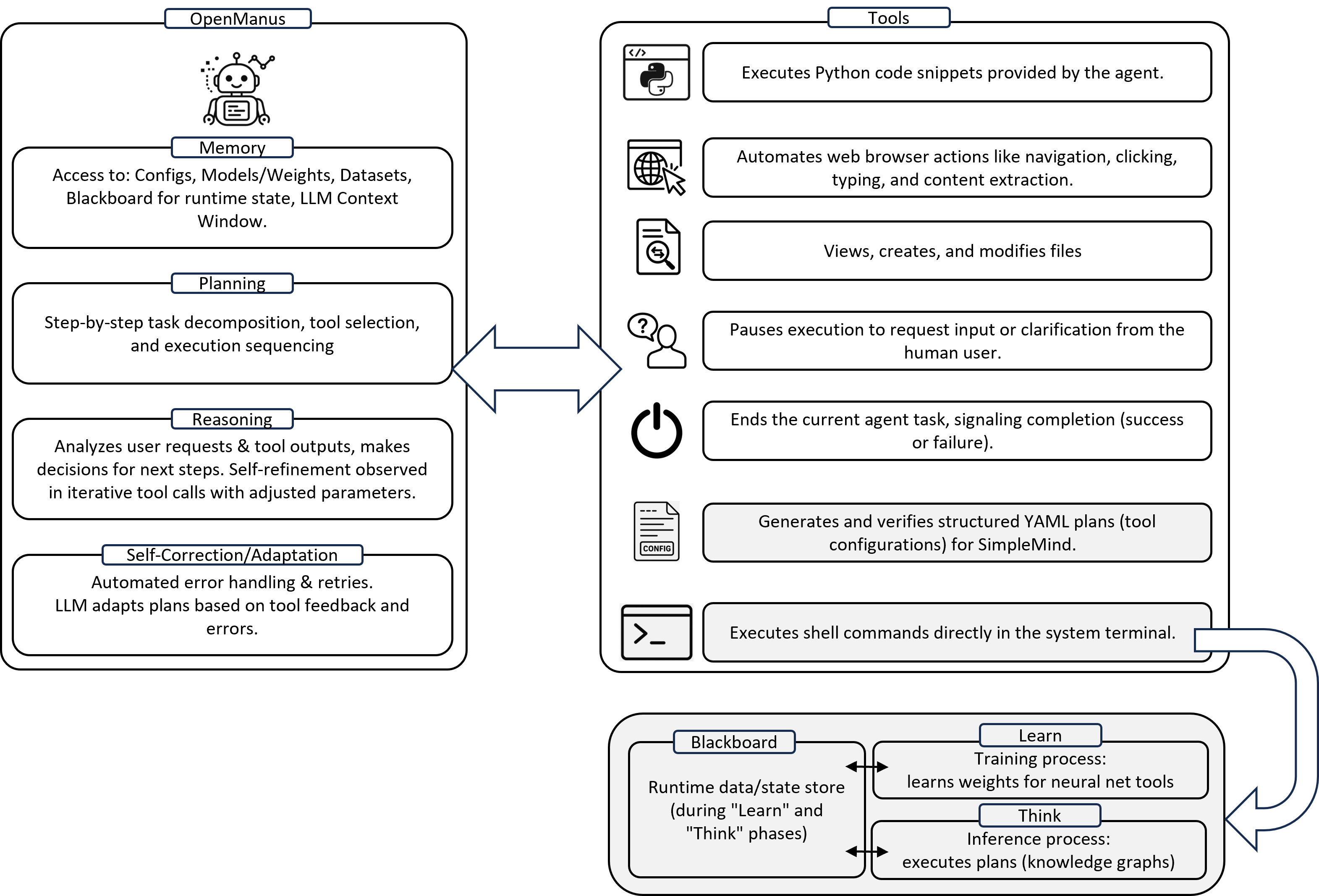

Agentic AI

AI agents for autonomous computer vision development, spatial reasoning, and multimodal understanding. Focus on spatial precision with articulated reasoning and autonomous system development. Recent work on autonomous computer vision development is published on arXiv.

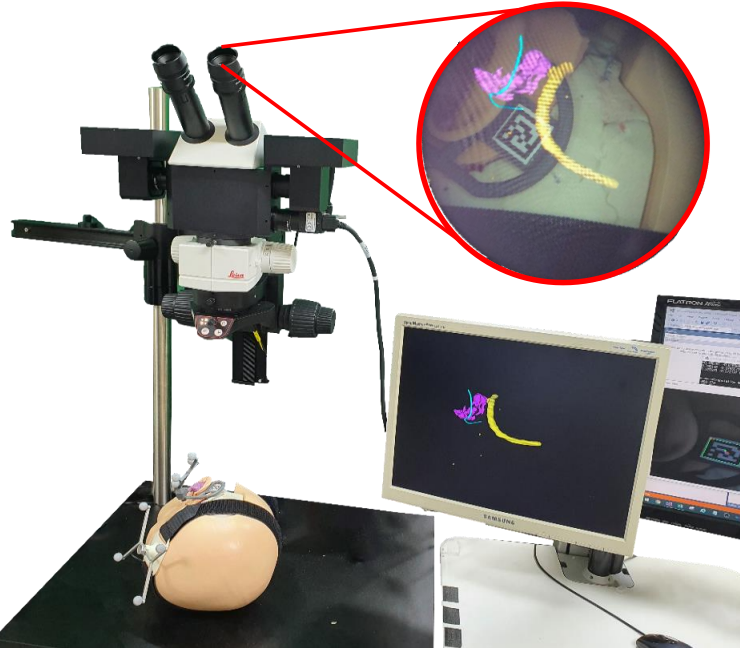

Foundational Work: Surgical Navigation

Previous research in AR-enhanced navigation systems for precise surgical procedures, particularly in ENT applications. This foundational work includes my Master's thesis, "Development of an AI-Enhanced AR Navigation System for Precise Mastoidectomy," which established core competencies in medical imaging that inform my current vision-language model research.

Recent News

- [Nov 2025] 📄 Paper submitted to arXiv - "TRACE: Textual Reasoning for Affordance Coordinate Extraction"

- [Nov 2025] 📝 Paper submitted to SPIE Journal of Medical Imaging - "Selective Segmentation with Rejection Option: A Machine Reasoning Approach and Evaluation Metrics in CT Kidney Segmentation"

- [Oct 2025] 🎉 Paper accepted at ICCV 2025 Workshop - "SPAR: Spatial Precision with Articulated Reasoning"

- [Sep 2025] 🎉 Paper accepted at ICCV 2025 Workshop - "TRACE: Textual Reasoning for Affordance Coordinate Extraction"

- [Jul 2025] 📄 Paper accepted at MICCAI 2025 Workshop on MLLMs in Clinical Practice - "Dual-path Radiology Report Generation"

Education

University of California, Los Angeles

GPA: 3.84/4.0

Qualifier: Leveraging Foundation Models, Knowledge Distillation, and Pseudo-Labeling for Robust Lung Segmentation in Computed Tomography Scans

Proposal: Vision-Language Modeling for Medical Image Analysis

Seoul National University

GPA: 3.68/4.3

Thesis: Development of an AI-Enhanced AR Navigation System for Precise Mastoidectomy

Yeungnam University

Merit-based scholarship (70% tuition waiver), GPA: 3.95/4.5 (Major)

Experience

University of California, Los Angeles

Computer Vision and Imaging Biomarkers Lab

Advisors: Prof. Matthew S. Brown & Prof. Dan Ruan

Seoul National University

Medical Image Innovation Laboratory

Advisor: Prof. Won-Jin Yi